Am I a machine learning dinosaur?

A personal paradigm shift

On why I, data science dinosaur, am suddenly more excited about AI than ML.

trying out prompt engineering

This past week I worked through DeepLearning.AI’s ChatGPT Prompt Engineering for Developers course, as part of the foundation I need for using GenAI language models at my day job. The course is only about an hour long. I’d recommend it to any developers or data scientists wondering how they might use prompt engineering effectively and for what purpose. Even if you’re not a developer, you might find it enlightening. All the live code is there for you in an online Jupyter notebook. Very cool! 😎

What blew my mind about what I learned was that, if this works, it makes old-style machine learning obsolete, at least in the natural language processing (NLP) space, which is where I mainly put my efforts these days. At TMP we have so much text data, after all, in the form of resumes and job descriptions.1

After that I started using some of what I learned to improve upon the prompts I was using for a structured NLP information extraction task, extracting skills from resumes and identifying which ones are most important to the job. This is something for which off-the-shelf solutions already exist but they don’t work as well as they might, and they are expensive.
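To make the idea of prompting for structured extraction concrete, here is a minimal sketch of what such a prompt might look like. It is not the prompt I actually use: the function name, the `<resume>` delimiters, and the JSON keys `skill` and `important` are all assumptions made up for illustration, and the call to a language model is left out entirely.

```python
def build_skill_extraction_prompt(resume_text: str, job_title: str) -> str:
    """Describe the extraction task in plain English and append the delimited resume.

    Hypothetical helper: the wording, tags, and JSON keys are illustrative only.
    """
    instructions = [
        "You are extracting skills from a resume.",
        "The resume is delimited by <resume> and </resume> tags.",
        "Step 1: List every professional skill the resume mentions.",
        f"Step 2: Decide whether each skill is important for the job title '{job_title}'.",
        'Step 3: Reply with JSON only: a list of objects with the keys "skill" '
        '(a string) and "important" (true or false).',
        "If the resume mentions no skills, reply with an empty list: []",
    ]
    # Delimiters keep the instructions separate from the text being analyzed.
    return "\n".join(instructions) + f"\n\n<resume>\n{resume_text.strip()}\n</resume>"


prompt = build_skill_extraction_prompt(
    "Built ETL pipelines in Python and SQL.", "Data Engineer"
)
print(prompt)
```

Iterating on a prompt like this mostly means editing the English in `instructions` and rerunning it against a handful of resumes.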

As I iterated on prompts, I felt like I was describing a machine learning model in English. So when I saw Musk’s tweet on the same day, it resonated:

To me, it seems like prompt engineering is natural language natural language processing: NLNLP.

paradigm shift

My initial reaction to GenAI has been, “great, but my use cases don’t involve generating rambling, sycophantic, and possibly incorrect text responses. I still need my old ways of designing, training, and evaluating a model to do specifically what I want.”

But the prompt engineering course showed me ways of doing everything I wanted to do using ChatGPT instead of using my beloved bespoke NLP models. Now I can see what the prompt engineering ruckus is about.

Philosopher of science Thomas Kuhn proposed that science is marked by paradigm shifts (scientific revolutions) where new overarching theories take the place of the old. Approaches and frameworks under the new paradigm don’t make any sense if you’re working under the old one. You can’t translate the language and theories from one paradigm to the other; they are incommensurable.

The shift from ML to AI in NLP feels a bit like a Kuhnian scientific revolution.

I don’t think that the AI approach (natural language description of a natural language processing task) and the ML approach (translating a problem into a mathematical expression of it and then using optimization algorithms on the matrices to produce a compressed representation a.k.a. model) are entirely incommensurable. They can work together, and a data scientist who is able to switch between paradigms depending on the state of the art in AI versus what you can do with ML will be the most productive one.

But ten years down the line, maybe my ML skills will be entirely obsolete. Fortunately, I intend to retire within that time frame! 👵🏻

is AI disrupting ML?

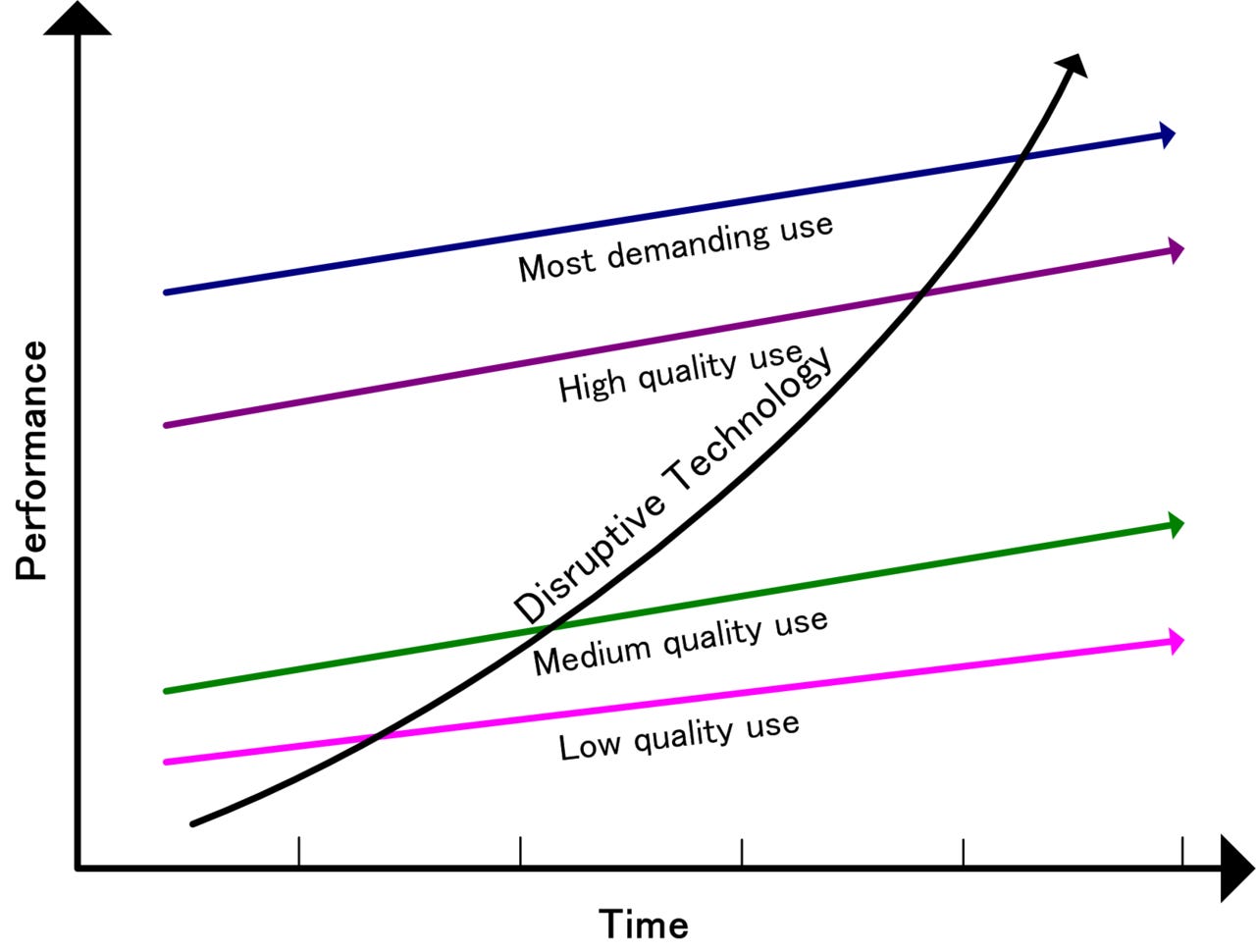

Maybe we could say that AI is disrupting the ML space, in the Clayton Christensen sense. Consider old-school ML-based NLP as the incumbent. Right now, it provides acceptable performance for the most demanding uses. I can get better performance at key NLP tasks (such as skill extraction from a resume) by going through a laborious process of constructing a ground truth dataset, crafting features (including possibly using BERT or other LLM embeddings), designing a neural network architecture to do extreme multilabel classification to apply the skills, then training and deploying the model. Or maybe I just do some fine-tuning of BERT or ChatGPT, either of which appeals to my “let’s train a model!” default task setting.

Instead of either of those, I can just describe to GPT-4 exactly what I want to do, and it does a passably good job without all that headache.2 While the performance isn’t as good (yet), it is so much easier. Plus, I expect it will eventually outpace the incumbent, and frameworks will be built around it to deal with any headaches it does involve.3
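One of the headaches is getting output back in exactly the JSON format needed. A rough sketch of one way to cope, assuming the model was asked for a list of objects with the (hypothetical) keys `skill` and `important`: parse the reply strictly and raise an error when it doesn't match, so the calling code can retry. The function name and the schema are illustrative assumptions, and the reply string below is hand-written, not real model output.

```python
import json

FENCE = "`" * 3  # Markdown code fence, which chat models sometimes wrap around JSON


def parse_skills_reply(reply: str) -> list:
    """Return the parsed list of skills, or raise ValueError if the format is off."""
    text = reply.strip()
    if text.startswith(FENCE) and text.endswith(FENCE) and "\n" in text:
        # Drop the opening fence line (which may carry a "json" tag) and the closing fence.
        text = text[text.index("\n") + 1 : -len(FENCE)].strip()
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, list):
        raise ValueError("expected a JSON list")
    for item in data:
        if not isinstance(item, dict) or set(item) != {"skill", "important"}:
            raise ValueError(f"unexpected item: {item!r}")
        if not isinstance(item["skill"], str) or not isinstance(item["important"], bool):
            raise ValueError(f"wrong value types in item: {item!r}")
    return data


# Hand-written stand-in for a model reply wrapped in a code fence.
reply = FENCE + 'json\n[{"skill": "Python", "important": true}]\n' + FENCE
print(parse_skills_reply(reply))
```

Failing loudly on a malformed reply is a design choice: a retry costs one more request, while silently accepting a half-right structure pushes the problem downstream.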

the dinosaurs

As a traditionally-trained and experienced data scientist and ML engineer, I expect I might have some challenges switching to the new paradigm. And I’m not the only one. I spoke with a founder at an AI startup yesterday. He was probably in his twenties, and had established his credentials as an ML developer at Facebook. When I explained to him my use case of extracting skills from resumes, he said, “standard NLP approaches would probably work pretty well for that.” He’s not wrong.

He and his company are focused on RAG (retrieval-augmented generation) as a key way to use LLMs. This is what many of his company’s clients are doing with GenAI. I thought to myself, “this guy, young as he is, might be a dinosaur like me” even though he’s in the LLM space.

I’m not that excited about RAG as of yet, but maybe I’m missing something, like I’ve been missing something with prompt engineering. “Let’s build a better search engine” just doesn’t get me all that fired up. It seems like it puts too much onus on human intelligence to figure out how to solve a problem. I suspect my ignorance of the power of RAG will be upended during 2024.4

updating my terminology

I have resisted the urge so far to use the term AI to refer only to GenAI capabilities such as ChatGPT, as so many people seem to be doing these days. But I’m getting closer to making the terminological switch as I spend more time with prompts and less with setting up ML training jobs.

I’m personally deprecating the terms deep learning and machine learning.

“Deep learning” overemphasizes a particular tributary path to the current state, and a quality of “deepness” that seems more poetic than fundamental. “Machine learning” overemphasizes the machine-like aspect which I think is highly misleading. AI, I think, has been reified into sufficient meaninglessness that we can just use it without worrying about the inclusion of the word “intelligence” with all its unresolved philosophical baggage.

I’m ready to go all out on the AI terminology, and in fact I already made moves that way in 2023. My title at work is Head of AI and Analytics and I lead the AI Engineering team (along with data analytics, which makes for a great combo of synergistic functions).

where to go from here

I find myself wondering what a transition from an ML (NLP) practice to an AI (NLP) practice will look like. Is it like a real Kuhnian paradigm shift, where the (data) scientists who did it the old way must retire and move on, allowing for (data) scientists who think in the new way to take over? Or can the dinosaurs survive and thrive in the new world?

In many ways, AI makes us experienced (old) data scientists suddenly more relevant. We don’t need to remember every last bit of Python syntax; ChatGPT can tell us what to do given a natural language description of a function. But how to design an AI/ML-driven feature that makes business impact, or how to evaluate the results of a model in a way that you don’t fool yourself into thinking it works better than it does: these aren’t things that any GenAI chat capability will help much with, in its present form. This isn’t about crystallized versus fluid intelligence. It’s about having a broad and deep understanding of the practices and principles of a field in a way that lets you contribute important, useful capabilities.
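As a small illustration of the evaluation point, here is a sketch of scoring extracted skills against a hand-labeled ground truth using precision, recall, and F1. The function names and the example skills are made up for illustration. The discipline it stands for is the familiar one: score against labels the prompt was never tuned on, whether the extractor is a trained model or a prompt.

```python
def normalize(skills) -> set:
    """Lowercase and trim skill names so trivial differences don't count as misses."""
    return {s.strip().lower() for s in skills}


def precision_recall_f1(predicted: set, expected: set) -> tuple:
    """Set-based precision, recall, and F1 for one resume's extracted skills."""
    true_positives = len(predicted & expected)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(expected) if expected else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Made-up example: what an extractor returned versus what a human labeled.
predicted = normalize(["Python", "SQL ", "Leadership"])
expected = normalize(["python", "sql", "Airflow", "dbt"])

precision, recall, f1 = precision_recall_f1(predicted, expected)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Looking at precision and recall separately matters here: an extractor that returns only two safe skills can have perfect precision while missing most of what the resume says.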

Just like staff-level software engineers will not be replaced by AI any time in the near future (because AI can’t plan out a full system architecture, reason about all the restrictions the legacy code base puts on future work, or negotiate scope and deadlines with a product manager), data scientists will be augmented by AI for the near and medium-term future, not replaced by it.

Anne Zelenka is co-founder of Incantata AI. She also serves as Head of AI and Analytics at The Mom Project, a talent marketplace aimed at creating economic opportunity for moms and everyone else who wants a better work-life balance. These opinions are her own and do not represent those of TMP.

1. We do a lot of tabular data processing at TMP as well. There is no GenAI disruption of this space as of yet, and even neural nets don’t seem necessary. Tree-based methods work as well with far less tuning required.

2. There are still headaches, but they are novel headaches so they are more fun. For example: how do I convince GPT-4 to give me JSON output in exactly the format I need and not punt because it’s feeling lazy?

3. Though I agree that frameworks often limit speed of development and creativity. I would be reluctant to constrain my AI Engineering team with one.

4. Which is not to say I don’t appreciate all the interesting things you can do with vector retrieval, embeddings, and semantic search. I’m not saying RAG isn’t worthwhile; it just doesn’t capture my attention like NLNLP does.