We’re at the stage of GenAI adoption where the first project every company builds is a chatbot. Yes, it’s the most obvious way to get started quickly with “AI” (when you think of AI as ChatGPT, DALL-E, Sora, and other generative AI capabilities), but it’s probably not the most impactful way to go. Besides, chatbots are hard to make safe.

As I move forward with using LLMs such as GPT-4 in production systems, I’m starting to recognize the power of retrieval-augmented generation (RAG) that everyone has been trumpeting (on my Twitter timeline, at least). I thought it was just for question answering or other applications that output paragraphs of text (including chatbots!), but really it’s a model for how you can use LLMs to improve almost any natural language processing pipeline: named entity recognition, structured knowledge extraction, text classification, and more. Ultimately, LLMs are another way to do NLP, besides being useful coding assistants, editors for all your writing tasks, and rubber ducky thought partners.🐤

what is retrieval augmented generation (RAG)?

Large language models like ChatGPT are part of a class of AI systems known as Generative AI, a.k.a. GenAI. These models and systems can generate sophisticated output (text, images, video, etc.) that before them only humans could create.

Retrieval-augmented generation uses additional data to improve the responses an AI gives. The paper that introduced it described more than just combining retrieval with generation, but here I’m going to keep things pretty simple.

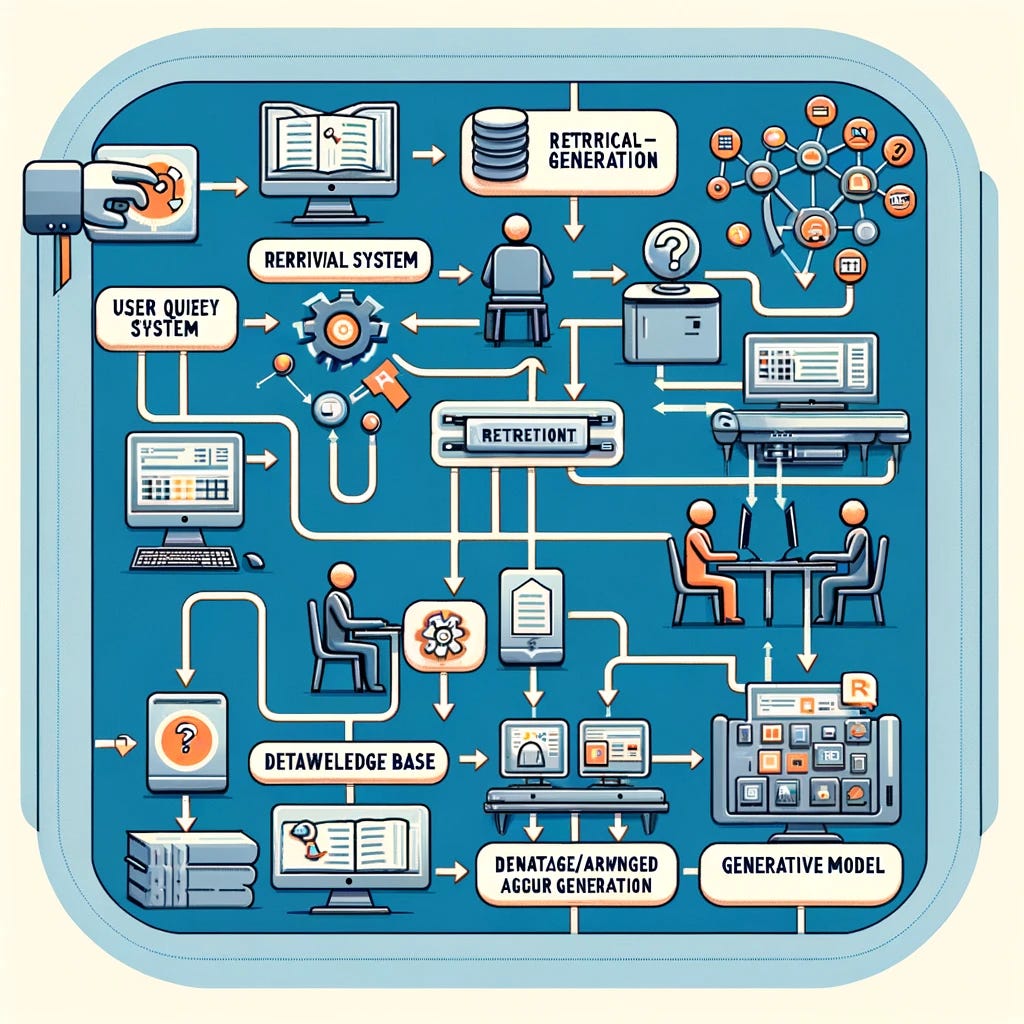

I asked ChatGPT with its image generation compatriot DALL-E to create a diagram showing the retrieval augmented generation process. Check it out:

Makes it pretty clear what’s going on? No? Sorry. 😂

The text that accompanied the image is a reasonable description of RAG:

The image illustrating the flow of retrieval-augmented generation (RAG) has been created. It outlines the process starting from a user input or query, through the retrieval of relevant information from a database or knowledge base, to the generation of a response by the generative model, culminating in the final output presented to the user.

Here’s a better diagram, from Leonie Monigatti’s excellent overview of RAG:

The key is that before you prompt your generative AI language model, you first retrieve information from your proprietary dataset, usually via an embeddings query hitting a vector database.[1] The retrieved information is included in the prompt as context, giving the language model additional information on the fly. Presumably this should reduce hallucinations and produce more meaningful responses.[2]
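Here is a minimal sketch of that retrieve-then-prompt loop. It assumes a toy bag-of-words `embed` function in place of a real embedding model and a sorted Python list in place of a vector database query; the function names and prompt wording are illustrative choices, and the actual call to the LLM is left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (stand-in for a vector DB search)."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Put the retrieved passages into the prompt as context for the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    docs = [
        "Our refund window is 30 days.",
        "Support hours are 9am to 5pm CT.",
        "Shipping is free on orders over $50.",
    ]
    # The printed prompt is what you would send to the language model.
    print(build_prompt("How many days is the refund window?", docs, k=1))
```

With `k=1`, only the refund snippet makes it into the prompt. In a real system you would swap `embed` for an embedding model and the sort for a nearest-neighbor query against your vector store.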

beyond question answering

RAG-esque models that include retrieval via embeddings from a vector database are not limited to question answering and chatbot improvements, which are often the use cases described in introductions to the approach. You can also do more typical natural language processing tasks such as labeling free text, using labels from a predefined set or taxonomy.
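As a sketch of the labeling case, the snippet below scores every label in a small, made-up taxonomy against the input text and keeps the closest matches. Word-overlap (Jaccard) similarity stands in for embedding similarity here, and `TAXONOMY`, `label_text`, and the `min_score` cutoff are hypothetical; a production version would embed the labels once and query them through a vector database.

```python
import re

# Hypothetical mini-taxonomy: label -> a few words describing it.
TAXONOMY = {
    "Python programming": "python programming scripting language",
    "Data analysis": "data analysis statistics pandas sql reporting",
    "Project management": "project management planning scheduling stakeholders",
}


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a: set[str], b: set[str]) -> float:
    """Jaccard overlap: a toy stand-in for cosine similarity between embeddings."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def label_text(
    text: str, taxonomy: dict[str, str] = TAXONOMY, k: int = 2, min_score: float = 0.05
) -> list[str]:
    """Return up to k taxonomy labels closest to the text, dropping weak matches."""
    query = tokens(text)
    scored = [
        (similarity(query, tokens(f"{label} {desc}")), label)
        for label, desc in taxonomy.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [label for score, label in scored[:k] if score >= min_score]


print(label_text("Looking for someone strong in Python and SQL for data analysis and reporting"))
# → ['Data analysis', 'Python programming']
```

The output can only ever contain labels from the predefined set, which is what makes this kind of task easier to keep on the rails than open-ended generation.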

As an example, consider the open source project xmc.dspy, for eXtreme Multi-Label Classification (XMC), which can label incoming text using taxonomies with a very large number of labels (e.g., 10,000+). The accompanying paper covers two examples, one of which is near to my heart: extracting skills from a job description and then normalizing them to a predefined taxonomy.

This approach extends the standard RAG approach by using language models before retrieval against a custom dataset, in addition to after retrieval:

xmc.dspy uses DSPy, a way of programmatically engineering prompts for LLMs. DSPy appeals to me as a lifelong programmer. I’d rather program than prompt, as comfortable as I am with verbally expressing myself. It’ll be interesting to see whether that kind of approach (programming over prompting) wins out over more direct prompt engineering approaches such as those used in LangChain.

Why add the first step to a typical RAG setup? Because the language model can generate queries that may work better for the retrieval stage than whatever queries you would otherwise construct.

More interesting to me is the last step, where the output is intended to be a set of labels, not a few paragraphs of a response to a question.
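The shape described above, with a language model both before and after retrieval, can be sketched as three stages: infer, retrieve, rank. This is not xmc.dspy’s code and does not use DSPy; `fake_infer` and `fake_rank` are hypothetical stand-ins for the two language model calls, and the word-overlap retriever stands in for an embeddings query. The point is the structure: the LM writes the queries, retrieval grounds them in the taxonomy, and the final output is a list of labels.

```python
import re
from typing import Callable


def words(s: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", s.lower()))


def retrieve(query: str, labels: list[str], k: int = 3) -> list[str]:
    """Toy retriever: taxonomy labels sharing words with the query, best overlap first."""
    q = words(query)
    hits = [lab for lab in labels if q & words(lab)]
    hits.sort(key=lambda lab: len(q & words(lab)), reverse=True)
    return hits[:k]


def infer_retrieve_rank(
    text: str,
    labels: list[str],
    infer: Callable[[str], list[str]],
    rank: Callable[[str, list[str]], list[str]],
    k: int = 3,
) -> list[str]:
    # 1. Infer: an LM turns raw text into short queries (e.g., skill phrases).
    queries = infer(text)
    # 2. Retrieve: ground each query in the taxonomy, de-duplicating candidates.
    candidates: list[str] = []
    for q in queries:
        for lab in retrieve(q, labels, k):
            if lab not in candidates:
                candidates.append(lab)
    # 3. Rank: an LM reorders or prunes the candidates against the original text.
    ranked = rank(text, candidates)
    # Keep only labels that exist in the taxonomy, even if the ranker invents one.
    return [lab for lab in ranked if lab in labels]


def fake_infer(text: str) -> list[str]:
    """Hypothetical stand-in for an LM call: split on commas and the word 'and'."""
    return [p.strip() for p in re.split(r",|\band\b", text) if p.strip()]


def fake_rank(text: str, candidates: list[str]) -> list[str]:
    """Hypothetical stand-in for an LM reranker: keeps retrieval order."""
    return candidates
```

With labels `["Python (computer programming)", "SQL", "Manage budgets", "Public speaking"]`, the text `"python scripting, sql queries and public speaking"` comes back as `["Python (computer programming)", "SQL", "Public speaking"]`. A query like “budget management” would miss “Manage budgets” with this toy retriever, which is exactly the kind of paraphrase gap that embeddings are meant to close.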

In extreme multi-label classification (XMC), you are generating structured output pinned to a taxonomy, which makes it much more likely you’ll get the kind of answers you want. There are ways to structure and validate the answers a GenAI system provides: for example, the Instructor library makes it easy to use the Pydantic Python library to validate LLM output. You don’t have to say in your prompt, “please please please output in json and don’t use elements other than those I’ve provided in the taxonomy.” Instructor makes the model comply (or errors out).
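The snippet below sketches the kind of check that Pydantic and Instructor automate, using only the standard library so it runs anywhere: parse the model’s JSON and reject anything outside the taxonomy. It is not Instructor’s API; the `{"labels": [...]}` output shape, `TAXONOMY`, and `LabelValidationError` are assumptions for illustration. With Instructor you would declare a Pydantic model instead and let the library do the validating.

```python
import json

# Hypothetical taxonomy; in practice this could hold thousands of labels.
TAXONOMY = {"Python (computer programming)", "SQL", "Public speaking"}


class LabelValidationError(ValueError):
    """Raised when model output doesn't match the expected label schema."""


def parse_labels(raw: str, taxonomy: set[str] = TAXONOMY) -> list[str]:
    """Parse LLM output shaped like {"labels": [...]} and enforce the taxonomy."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise LabelValidationError(f"output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict) or not isinstance(data.get("labels"), list):
        raise LabelValidationError('expected an object like {"labels": [...]}')
    labels = data["labels"]
    if not all(isinstance(lab, str) for lab in labels):
        raise LabelValidationError("every label must be a string")
    unknown = [lab for lab in labels if lab not in taxonomy]
    if unknown:
        raise LabelValidationError(f"labels not in taxonomy: {unknown}")
    return labels
```

Chatty replies (“Sure! Here are the labels…”), wrong shapes, and invented labels all raise instead of flowing silently into downstream features.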

If you were to build a system like this for your first GenAI foray, rather than a chatbot, you’d be far less likely to lead your users astray. And you can use the output in many different ways (summarizing a candidate’s skill set, feeding a job-talent matching algorithm, listing the most important skills for a job description, etc.).
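Because the labels come from a fixed taxonomy, downstream features can use plain set operations instead of fuzzy text comparison. Here is a hypothetical job-talent match score (the fraction of a job’s skills that a candidate has) plus the list of skills the candidate is missing; the scoring rule is an illustrative assumption, not a recommended matching algorithm.

```python
def match_score(candidate_skills: list[str], job_skills: list[str]) -> float:
    """Fraction of the job's required skills that the candidate has (0.0 to 1.0)."""
    required = set(job_skills)
    if not required:
        return 0.0
    return len(required & set(candidate_skills)) / len(required)


def missing_skills(candidate_skills: list[str], job_skills: list[str]) -> list[str]:
    """Job skills the candidate lacks, in the job description's order."""
    have = set(candidate_skills)
    return [s for s in job_skills if s not in have]


candidate = ["SQL", "Python (computer programming)"]
job = ["SQL", "Public speaking"]
print(match_score(candidate, job), missing_skills(candidate, job))
# → 0.5 ['Public speaking']
```

This only works because both sides were normalized to the same taxonomy; two free-text skill blurbs couldn’t be compared this simply.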

the pieces of a RAG system

The basis of any RAG system is its ability to retrieve relevant information to pass to the LLM in a prompt.[3] This is why vector databases are so hot these days: they can store embeddings (numeric vectors representing text) and make it easy to search for near neighbors. For your vector database, you might consider the grandmama of search, Elasticsearch (or AWS’s Elasticsearch offshoot, OpenSearch), a dedicated new-fashioned vector database like Pinecone, or, for more lightweight use, a library like Faiss.

You also need to be able to engineer (or program) and manage your prompts: consider LangChain / LangSmith, DSPy, or something else, maybe from one of the many startups working in this space? This is an area of active research and development that I’m digging into now.

so what?

A chatbot is the most obvious way of using ChatGPT and its kin. You can improve upon chatbots by using RAG, but you can also do more interesting tasks like extreme multi-label classification using similar approaches that combine retrieval with generation. Getting structured data out provides the basis for many interesting features, way beyond what you can do with blobs of generated text. That’s safer, and, to me, way more interesting.

Anne Zelenka is co-founder of Incantata AI. She also serves as Head of AI and Analytics at The Mom Project, a talent marketplace aimed at creating economic opportunity for moms and everyone else who wants a better work-life balance. These opinions are her own and do not represent those of TMP.

[1] Embeddings are numeric vectors representing text (or other entities) in multidimensional space. You can find text with similar meanings by encoding the text of your query as an embedding and then finding the embeddings in your vector store that are nearest to it. This is the basis of semantic search, many recommender systems, and retrieval for RAG.

[2] Some people think enhancing LLMs with retrieval (i.e., RAG) is just a temporary patch until LLMs can handle much longer input prompts. Google’s announcement of Gemini 1.5 Pro, with a context window of up to 1M tokens (and up to 10M tokens in research testing), led to suggestions that RAG soon won’t be necessary. For a variety of reasons, I don’t think that’s the case, but I’m keeping the possibility in mind.

[3] RAG doesn’t have to use embeddings for retrieval, but it often does. It can also retrieve information from a SQL database, a search engine that indexes free text, or any other kind of datastore.