Nvidia reported crazy good results this past week, better than already-optimistic forecasts. For the fourth quarter, they reported revenue of $18.4 billion for their data center business (which includes sales of GPUs typically used for AI) compared to just $3.62 billion in the same quarter the year before. For the entire fiscal year, Nvidia saw revenue of almost $61 billion compared to just $27 billion the year before, and their gross margin jumped to 72.7% from 56.9%.
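To make the scale of these jumps concrete, here is a quick back-of-envelope calculation in Python that uses only the figures above. One assumption: “almost $61 billion” is rounded up to 61, which slightly overstates full-year growth.

```python
# Figures as reported above (USD billions; gross margin in percent).
dc_rev_q4_now, dc_rev_q4_prior = 18.4, 3.62
fy_rev_now, fy_rev_prior = 61.0, 27.0  # "almost $61 billion" rounded up to 61 (assumption)
gm_now, gm_prior = 72.7, 56.9

def growth_pct(new: float, old: float) -> float:
    """Year-over-year growth, expressed in percent."""
    return (new / old - 1) * 100

dc_growth = growth_pct(dc_rev_q4_now, dc_rev_q4_prior)
fy_growth = growth_pct(fy_rev_now, fy_rev_prior)
gm_change_pts = gm_now - gm_prior  # change in percentage points, not percent

print(f"Data center Q4 growth: {dc_growth:.0f}% ({dc_rev_q4_now / dc_rev_q4_prior:.1f}x)")
print(f"Full-year revenue growth: {fy_growth:.0f}%")
print(f"Gross margin change: {gm_change_pts:.1f} percentage points")
```

This prints data center growth of about 408% (roughly 5.1x), full-year growth of about 126%, and a gross margin gain of 15.8 percentage points.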

How can this be happening? Is it real or some sort of bubble? Maybe both. Nvidia’s financial results are real, but they’re driven by a bubble in AI investment on the part of big tech companies.

Across news articles and social media, comparisons are being made to dot-com era Cisco. Cisco saw its market cap peak during the dot-com bubble at a value of almost $560 billion. It is worth just $197 billion now.

But Nvidia’s price-to-earnings ratio on expected 2024 earnings is now only around 32, compared with Cisco’s multiple of more than 150 times forward earnings in March 2000, at its dot-com peak.
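A forward P/E is easier to compare when it is flipped into an earnings yield (expected earnings per dollar of share price, that is, 1 divided by the P/E). The sketch below uses only the Cisco and Nvidia figures quoted above. It deliberately ignores growth expectations, which is exactly what bulls and bears disagree about.

```python
# Figures quoted above.
cisco_peak_cap, cisco_cap_now = 560.0, 197.0  # market cap, USD billions
nvda_forward_pe, cisco_forward_pe_2000 = 32.0, 150.0

# Percentage decline in Cisco's market cap from its dot-com peak to now.
cisco_drawdown_pct = (1 - cisco_cap_now / cisco_peak_cap) * 100

def earnings_yield_pct(pe: float) -> float:
    """Expected earnings per $100 of share price: the inverse of the P/E ratio."""
    return 100 / pe

print(f"Cisco market cap decline from peak: {cisco_drawdown_pct:.0f}%")
print(f"Nvidia forward earnings yield: {earnings_yield_pct(nvda_forward_pe):.1f}%")
print(f"Cisco forward earnings yield, March 2000: {earnings_yield_pct(cisco_forward_pe_2000):.2f}%")
```

At a multiple of 150, Cisco buyers in 2000 were getting well under a penny of expected earnings per dollar invested, versus about three cents for Nvidia today. A low multiple only looks cheap if the earnings underneath it are durable.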

Nvidia bulls argue that Nvidia is cheap, and the results are real:

Nvidia’s success has not been about hype at all, but about actual commercial success and growth that is just beyond most people’s imagination, and there is no sign of that slowing down.

But this bubble is different from the dot-com bubble, when investors piled in expecting incredible revenue and earnings later. Nvidia’s lower forward P/E ratio compared with dot-com-era Cisco reflects where the real FOMO is occurring: in big tech executive suites and boardrooms. Meta, Microsoft, Oracle, Amazon, Tencent and other big companies are buying Nvidia GPUs in hopes of a big payday when AI innovation starts producing real end-user value.

where is all this revenue coming from?

Who is buying all of Nvidia’s chips and boosting its revenue so much in such a short time? Big tech companies that want to win the GenAI space.

Microsoft and Meta are the biggest buyers of the H100 chip, which costs $30,000 apiece and powers generative AI model training and deployment, among other uses. In 2023, these two members of the Magnificent Seven each spent $4.5 billion on H100 chips. About $19B (70%) of Nvidia’s 2023 revenue of roughly $27B came from 12 big tech companies:
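The purchase figures above imply a couple of derived numbers. This sketch assumes every dollar of that spending went to H100s at the $30,000 price quoted; actual deal prices and product mix aren’t given here, so the unit count is only an approximation.

```python
# Figures quoted above.
h100_unit_price = 30_000   # USD per chip (assumed uniform list price)
spend_per_buyer = 4.5e9    # USD spent on H100s in 2023 by each of Microsoft and Meta
top12_revenue, total_revenue = 19e9, 27e9  # USD

# Approximate H100 units per buyer, if all spend went to H100s at list price.
implied_units = spend_per_buyer / h100_unit_price

# Share of Nvidia's revenue that came from the 12 largest big tech buyers.
top12_share_pct = top12_revenue / total_revenue * 100

print(f"Implied H100 units per buyer: {implied_units:,.0f}")
print(f"Top-12 customer share of revenue: {top12_share_pct:.1f}%")
```

Under those assumptions, each company bought on the order of 150,000 H100s, and about 70% of Nvidia’s revenue depended on a dozen customers.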

Meta says it will have 350,000 H100s and almost 600,000 total H100 compute equivalent GPUs by the end of this year. So this is a bubble that is not driven simply by investors chasing the hottest company around. The earnings themselves are driven by big techco FOMO. The fact that Nvidia’s current price is “only” a little bit above 30 times forward earnings doesn’t mean this isn’t a bubble. The stock price is reflective of the bubble in big tech spending on GPUs.

These big techcos are investing not because they are making lots of money from companies using GenAI effectively, but because they want to establish a beachhead in GenAI and beat their competitors. They expect that spending on AI by companies building for actual end users will be so vast that they’ll make a good return on this investment.

But we’re not seeing companies that use AI improve their earnings because of it, or even offer concrete plans for doing so beyond hand-waving AI boosterism.

David Cahn writes at Sequoia Capital:

While investors have extrapolated much from Nvidia’s results—and AI investments are now happening at a torrid pace and at record valuations—a big open question remains: What are all these GPUs being used for? Who is the customer’s customer? How much value needs to be generated for this rapid rate of investment to pay off?

Big cloud vendors are investing heavily in GPUs, but eventually this needs to pay off. Cahn’s calculations and assumptions suggest a $125B gap between what these vendors can reasonably expect to earn from this year’s expected investment in Nvidia GPUs and what they need to earn just to break even, let alone make a decent return above what they could have made investing elsewhere.
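Cahn’s post walks through how such a gap can be estimated. The sketch below is a simplified, hypothetical version of that kind of break-even calculation, not a reproduction of his model: the class name `GapModel` and all four inputs are illustrative assumptions, chosen so the arithmetic lands on the $125B figure cited above.

```python
from dataclasses import dataclass

@dataclass
class GapModel:
    """Hypothetical back-of-envelope model of an AI infrastructure revenue gap.

    All inputs are illustrative assumptions, in USD billions unless noted.
    """
    gpu_spend: float            # annual spend on GPUs
    tco_multiplier: float       # total data center cost per $1 of GPU spend (power, buildings, etc.)
    target_gross_margin: float  # gross margin the buyers' customers need to earn (0 to 1)
    expected_ai_revenue: float  # AI revenue vendors can reasonably expect

    def required_revenue(self) -> float:
        # Revenue needed so that total cost equals (1 - margin) of revenue.
        total_cost = self.gpu_spend * self.tco_multiplier
        return total_cost / (1 - self.target_gross_margin)

    def gap(self) -> float:
        # Shortfall between what is needed and what is expected.
        return self.required_revenue() - self.expected_ai_revenue

# Illustrative inputs only (assumptions, not sourced figures).
model = GapModel(gpu_spend=50, tco_multiplier=2, target_gross_margin=0.5, expected_ai_revenue=75)
print(f"Required revenue: ${model.required_revenue():.0f}B")
print(f"Gap: ${model.gap():.0f}B")
```

Making each assumption an explicit parameter shows how sensitive the result is: raising the required margin or lowering expected AI revenue widens the gap quickly, which is why estimates like this are best read as order-of-magnitude arguments.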

Optimistically, Cahn says his VC firm is looking for the startups that will leverage AI technology enough to create real end-customer value. No one knows what such companies might look like, which is not to say they won’t exist someday.

Cahn notes what happens in cycles like this, where infrastructure gets built out in advance of bona fide revenue-generating use cases:

During historical technology cycles, overbuilding of infrastructure has often incinerated capital, while at the same time unleashing future innovation by bringing down the marginal cost of new product development. We expect this pattern will repeat itself in AI.

That’s good for the end consumers of AI and for companies that leverage AI once the marginal cost comes down, but not so good for Nvidia or for the big tech companies who are burning up capital.

return on investment for AI/ML

I don’t think that we’re going to see startups fill the $125B gap that Cahn identifies any time soon. Instead, I think we’re going to see capital burning, GPU shortages turn into a GPU glut, and cloud companies pull back their data center spending as the AI space tries to find its way towards useful and cost-effective innovation.

Let’s take a look at the micro-level of what’s occurring in AI/ML development:

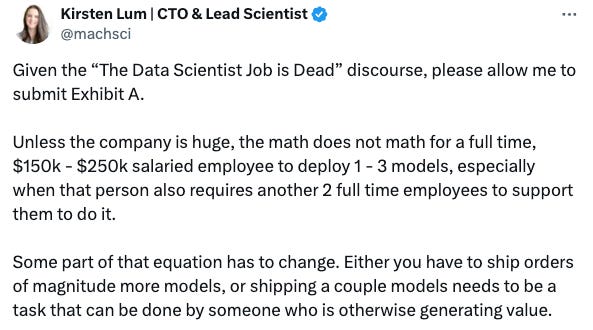

In my experience, this is largely true. Data science teams (of the AI/ML sort, not the “advanced analytics” or A/B testing sort) are not earning amazing ROI on their efforts, and GenAI doesn’t make this any easier, even if it does open up some new areas of possibility. Perhaps GenAI does mean you can do natural language programming, but building a useful NLP system that impacts business outcomes is still really hard whichever way you do it. Besides that, some people now argue that you should be programming foundation models, not prompting them. So far, “AI” is not that much different from the data science and ML work that came before. The work required and the payoffs are similar.

Simply deploying a machine learning model (or a chatbot) isn’t a surefire way to produce value with AI/ML. In isolation, a machine learning model’s outputs generally don’t provide value. Instead, you must build features and systems around models. This often involves standing up a retrieval system alongside your GenAI prompting, and almost always requires laboriously collecting and curating labeled datasets. These labeled datasets are critical for fine-tuning foundation models, for incorporating more classical machine learning capabilities into your pipelines, and, always, for evaluating your models and systems. Ah yes, you can use GenAI to generate labels; that’s true. But that’s not a hundred-billion-dollar opportunity.

Instruction-tuned foundation models like ChatGPT and its brethren do provide productivity improvements in a few areas:

Coding assistance, though not a full replacement for coders: someone still has to figure out what to build and how to architect it. Generative chat models aren’t at that level yet and may not be for decades (this is like self-driving vs. driver assistance).

Producing and editing written content, but only in a derivative way. The best writing on the Internet is not going to be AI-generated.

Question answering that substitutes for search: it’s often easier and more accurate to ask ChatGPT about something than to run a Google search. This isn’t a huge productivity improvement, though. The human asking the question still has to (1) decide what to ask and (2) do something with the answer. They also risk getting incorrect information because of foundation model hallucination.

On the image and video processing side, similar productivity gains can be expected.

However, none of these things are likely to generate $125B in revenue in the next few years. For comparison, the entire global SaaS market size is estimated at around $200B to $300B right now, and that’s across all the different verticals and functions that SaaS systems can support.

killer AI?

What might a killer AI use case look like? Not this:

This describes a feature that a podcast service could offer, not a killer AI product. That’s what most AI-based offerings are right now: ways to improve existing services, not transformative businesses and business models that would make frantic over-investment in GPUs worth it.

when will the bubble pop? maybe in 2025

Typically bubbles go further and last longer than anyone expects, and that will probably be the case for big tech companies spending on AI infrastructure, benefiting Nvidia, and making buying its stock look like a no-brainer.

But Nvidia’s customers are already facing financial pressures. Take Meta as an example. Meta saw its revenue growth accelerate through 2023, driven mainly by spending from Chinese e-commerce marketplaces. Meta is still burning capital on expanding Reality Labs (the metaverse). Meta’s operating margin is under pressure, but the company is propping it up by reducing headcount, which can only go so far. Operating margin will likely decrease further as Meta increases its investment in AI (both in GPUs and in building its own AI foundation models). It isn’t likely to see improved results from that investment in the near future, if ever. At some point investors are going to notice that the spending isn’t leading to improved financial results, as happened with metaverse spending.

Based on what happened in the dot-com boom and bust, and in the 2007-2008 financial crisis (with roots in the subprime mortgage boom of 2004 to 2006), bubbles seem to play out over periods of two to five years. Going back further, tulip mania accelerated in 1634 and collapsed in 1637. AI hype started in late 2022 with the launch of ChatGPT and accelerated in the second half of 2023 and into the first part of this year. It should continue this year and probably into 2025. I predict an AI-led crash and AI winter in 2025, affecting the big tech companies spending the most, and Nvidia too.

Until then, let’s party 🥳

Anne Zelenka is co-founder of Incantata AI. She also serves as Head of AI and Analytics at The Mom Project, a talent marketplace aimed at creating economic opportunity for moms and everyone else who wants a better work-life balance. These opinions are her own and do not represent those of TMP.